The final Ashes test of this summer has just started, a welcome distraction, no doubt, for some of those academics holed up preparing REF submissions (see Athene Donald’s recent post to get a feel for how time consuming this is, and the comments under it for a very thoughtful discussion of the issues I’m covering here). It also provides the perfect excuse for me to release another convoluted analogy, this time regarding the approaches taken in test match cricket and in academic science to measuring the intangible.

Anyone who follows sport to any extent will know how armchair and professional pundits alike love to stir up controversy, and much of the controversy in the current series has revolved around the Decision Review System (DRS) - the use of television technology to review umpires' decisions. Of course, TV technology is now used in many sports to check what has actually happened, and that is part of its role in cricket - Did a bat cross a line? Did the ball hit bat or leg? Whilst sports fans will still argue over what these images show, at least they are showing something that really happened.

Cricket, however, has taken technology a step further. In particular, one of the ways that a batsman can get out in cricket is LBW (leg before wicket), where (in essence) the umpire judges that, had it not hit the batsman’s leg, the ball would have gone on to hit the wicket. LBWs have been a source of bitter disputes since time immemorial, based as they are on supposition about what might have happened rather than anything that actually did. The application of the DRS was meant to resolve this controversy once and for all, using the ball-tracking technology hawk-eye to predict exactly (or so it seems) the hypothetical trajectory of the ball post-pad.

Of course, sports being sports, the technology has simply aggravated matters, as ex-players and commentators loudly question its accuracy. But, as well as demonstrating how poorly many people grasp uncertainty (not helped by the illusion of precision created by the TV representation of the ball-tracking), it struck me that there are parallels here with how we measure the quality of scientific output.

First and foremost, in both situations there is no ground truth to check against. The ball never passed the pad; quality is a nebulous and subjective idea.

But, perhaps more subtly, we also see the bastion of expert judgement (the umpire, the REF panel) challenged by the promise of a quick techno-fix (hawk-eye, various metrics).

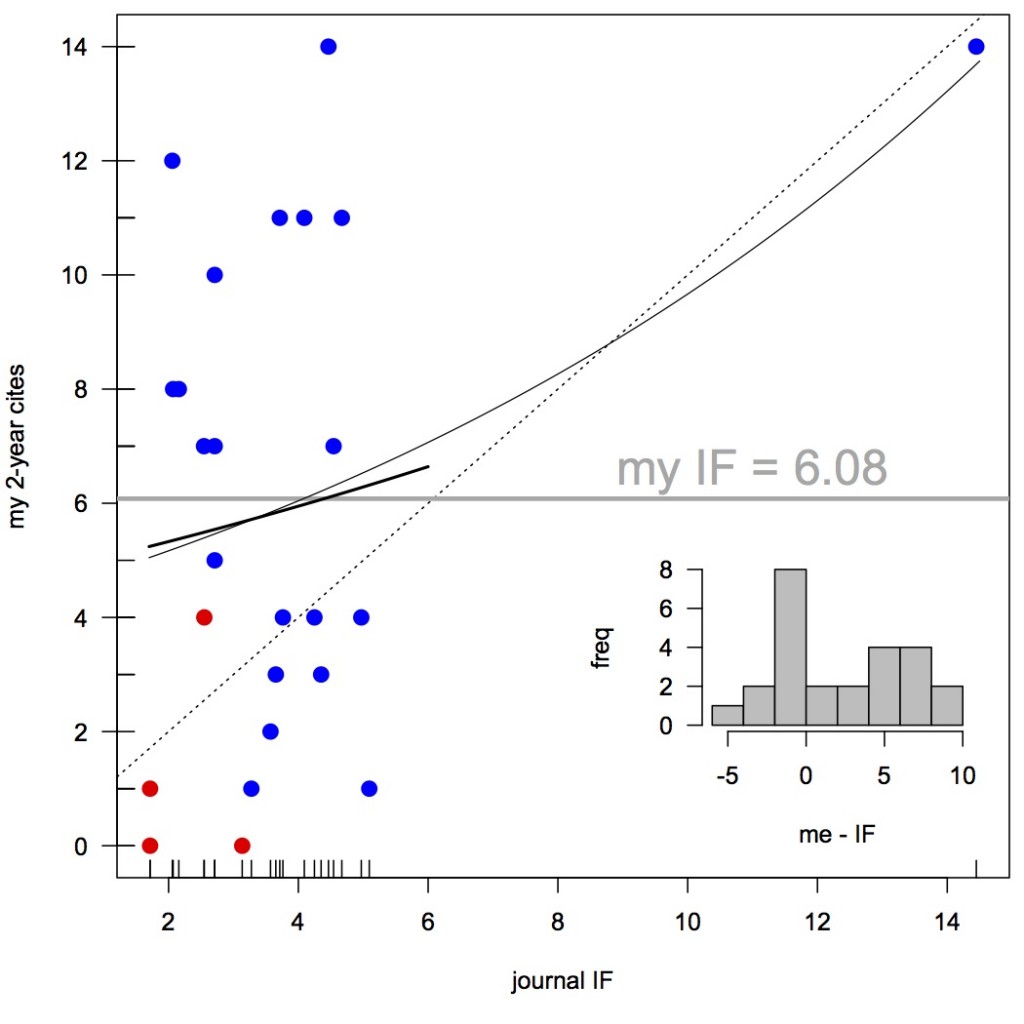

What has happened in cricket is that hawk-eye has increasingly been seen as 'the truth', with umpires judged on how well they agree with the technology. I would argue that citations are the equivalent, at least as far as scientific papers go: metrics or judgements that don't correlate with citations are considered worthless. For instance, much of the criticism of journal Impact Factors is that they say little about the citation rates of individual papers. This is certainly true, but it also implicitly assumes that citations are a better measure of worth than the expert judgement of editors, reviewers, and authors (in choosing where to submit). Now this may very well be the case (although I have heard the opposite argued); the point is, it's an assumption, and we can probably all think of papers that we feel ought to have been better (or less well) cited. As a thought experiment, rank your own papers by how good you think they are; I'll bet there's a positive correlation with their actual citation rates, but I'll also bet it's well short of 1. (You could also do the same with journal IF, if you dare…)
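For anyone who actually wants to run the thought experiment, the natural statistic is a rank correlation such as Spearman's rho, since you are comparing an ordering of papers rather than absolute scores. The sketch below computes it using only the standard library; the function names are my own, and the scores and citation counts are made-up numbers for illustration, not real data.

```python
import math

def average_ranks(values):
    """Rank values from 1 (smallest) upwards; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over any run of tied values starting at position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

def spearman(xs, ys):
    """Spearman's rho: the Pearson correlation of the two sets of ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))

# Hypothetical example: my own quality score for five papers (5 = best)
# and their (invented) citation counts.
my_score = [5, 4, 3, 2, 1]
citations = [40, 55, 12, 9, 3]
print(spearman(my_score, citations))  # 0.9: positive, but not 1
```

Here the two orderings agree except that my favourite paper is out-cited by my second favourite, which is enough to pull rho below 1.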

So, we're stuck trying to measure something that we cannot easily define or (in the case of predicting future impact) that hasn't even happened yet, and may never happen. But the important thing is to have some agreement. If everyone agrees to trust hawk-eye, then it becomes truth (for one specific purpose). If everyone agrees to replace expensive, arduous subjective review for the REF with a metric-based approach, that becomes truth too. This is a scary prospect in many ways, but it would at least free up an enormous amount of time currently spent assessing science for actually doing it (or at least, for chasing the metrics…)